Everything about the Google Coral USB Accelerator

On one of our YouTube videos about artificial intelligence, a commenter wrote that they weren’t that interested in a mystery AI box. It inspired me to write an article unraveling the mystery. So here is everything you need to know about the Google Coral USB Accelerator.

The Coral USB Accelerator is primarily designed for low-power, edge AI applications.

It’s suitable for tasks like image and video analysis, object detection, and speech recognition on devices like Raspberry Pi or laptops.

Overview

In short, the Google Coral USB Accelerator is a coprocessor built around Google’s Edge TPU, a Tensor Processing Unit (TPU). A TPU is an integrated circuit designed to be really good at one thing: matrix multiplication and addition.

Matrix multiplication is the core operation of a neural network: each layer multiplies its inputs by a matrix of learned weights and adds a bias.

And you definitely won’t get very far if you try to run neural networks on your Raspberry Pi’s CPU alone.

As such, the accelerator adds another processor that’s dedicated specifically to doing the linear algebra required for machine learning.
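To make “matrix multiplication and addition” concrete, here is a minimal pure-Python sketch of a single fully connected neural-network layer: it multiplies an input vector by a weight matrix, adds a bias vector, and applies a ReLU activation. The weights, bias, and input are made-up toy values; a real model repeats these multiply-add steps millions of times per image, which is the work the TPU takes off the CPU.

```python
def dense_layer(weights, bias, x):
    """Compute relu(W @ x + b) for one fully connected layer.

    weights: list of rows, one row per output neuron
    bias:    one value per output neuron
    x:       the input vector
    """
    outputs = []
    for row, b in zip(weights, bias):
        # Multiply-accumulate: the operation a TPU is built to do in bulk.
        total = sum(w * xi for w, xi in zip(row, x)) + b
        outputs.append(max(0.0, total))  # ReLU: negative results become 0
    return outputs


if __name__ == "__main__":
    # Hypothetical toy layer: 3 inputs, 2 output neurons.
    W = [[0.5, -1.0, 2.0],
         [1.0, 1.0, 1.0]]
    b = [0.1, -5.0]
    x = [1.0, 2.0, 3.0]
    print(dense_layer(W, b, x))
```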

As you probably know, the idea behind machine learning is to develop models that analyze and learn patterns from a dataset. This dataset has various inputs and corresponding outputs. Then, once trained, these models can take new inputs, synthesize the learned patterns, and generate appropriate outputs or predictions.
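As a deliberately tiny illustration of “learn patterns from inputs and outputs, then predict on new inputs”, the sketch below fits a straight line to a few made-up examples using ordinary least squares, then applies it to an input it never saw. This is a stand-in for the idea, not how neural networks are trained (they use gradient descent over many layers). The Coral accelerator is mainly used for the second half of this process: running a model that has already been trained.

```python
def fit_line(xs, ys):
    """'Training': find the slope and intercept minimising squared error."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """'Inference': apply the learned pattern to a new input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical dataset whose outputs follow y = 2x + 1.
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [1.0, 3.0, 5.0, 7.0]
    model = fit_line(xs, ys)
    print(predict(model, 10.0))  # an input that was not in the dataset
```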

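TPUs run machine learning workloads much faster and with much less energy than CPUs because their circuitry is dedicated to the enormous number of multiply-and-add operations that neural networks need, rather than to running arbitrary programs. They can also keep a model’s parameters in their own on-chip memory instead of constantly fetching them from shared system memory. Google rates the Edge TPU at 4 trillion operations per second (TOPS) using 0.5 watts per TOPS, or 2 TOPS per watt.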

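The TPU on the Coral USB Accelerator runs TensorFlow Lite models. TensorFlow Lite is a lightweight version of TensorFlow designed for mobile and embedded devices, and models for the Edge TPU must also be quantized to 8-bit integers and compiled with Google’s Edge TPU Compiler.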
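In an 8-bit quantized model, each real-valued weight or activation is stored as a small integer together with a scale and a zero point, using the affine mapping real_value = scale * (quantized_value - zero_point) that TensorFlow Lite uses. Below is a minimal plain-Python sketch of that mapping, assuming unsigned 8-bit integers (0 to 255). The scale and zero point are made-up values covering roughly 0 to 1; real models store their own parameters in the .tflite file, and this is not the converter’s actual code.

```python
def quantize(value, scale, zero_point, qmin=0, qmax=255):
    """Map a real number to an 8-bit integer: q = round(value / scale) + zero_point."""
    q = round(value / scale) + zero_point  # Python's round() rounds halves to even
    return max(qmin, min(qmax, q))  # clamp into the uint8 range [0, 255]


def dequantize(q, scale, zero_point):
    """Approximately recover the real number: value = scale * (q - zero_point)."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    # Hypothetical quantization parameters covering roughly [0, 1].
    scale, zero_point = 1 / 255, 0
    q = quantize(0.75, scale, zero_point)
    print(q, dequantize(q, scale, zero_point))  # a small rounding error is expected
```

The trade-off is a little precision (0.75 comes back as roughly 0.749) in exchange for cheap integer arithmetic and a model a quarter the size of its 32-bit float version.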

Using the Coral USB Accelerator

What’s especially great about it is how accessible it makes AI development.

Getting started is a breeze.

Really, all you need is a Google Coral USB Accelerator (obviously) and a computer with one free USB port and Python 3.5 or above.

And it’s worth mentioning that it works with Mac, Windows, and Linux (specifically, Debian-based operating systems like Ubuntu or Raspberry Pi OS), so you should be able to get it running on most common setups.

When you set it up, you’ve got to decide whether you want it to run at the maximum or a reduced clock frequency. You make this choice by installing either the libedgetpu1-max or the libedgetpu1-std runtime package. Maximum clock frequency is faster, but it also uses more power, and the accelerator can get hot enough to burn you if you touch it.

Running an Image Recognition Model

On the Google Coral website, they offer a wonderful test to show you how the Coral USB Accelerator works. In their example, it is able to recognize different birds with pretty remarkable accuracy.

So here’s how you run the image recognition model on your Raspberry Pi. This will work perfectly well with the reduced clock frequency.

Don’t plug in the accelerator just yet.

First, open a terminal and run the following commands to add the Edge TPU package repository:

echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | sudo tee /etc/apt/sources.list.d/coral-edgetpu.list
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
sudo apt-get update

Then install the Edge TPU runtime:

sudo apt-get install libedgetpu1-std

Now you can plug in the accelerator. If it was already plugged in, unplug it and plug it back in so the newly installed udev rule takes effect. Once you’ve got it hooked up, install PyCoral:

sudo apt-get install python3-pycoral

And now you can get the model up and running. First, download the example code:

mkdir coral && cd coral
git clone https://github.com/google-coral/pycoral.git
cd pycoral

Then install the example’s requirements (this also downloads the test model, labels, and images):

bash examples/install_requirements.sh classify_image.py

And finally, run the model:

python3 examples/classify_image.py \

--model test_data/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite \

--labels test_data/inat_bird_labels.txt \

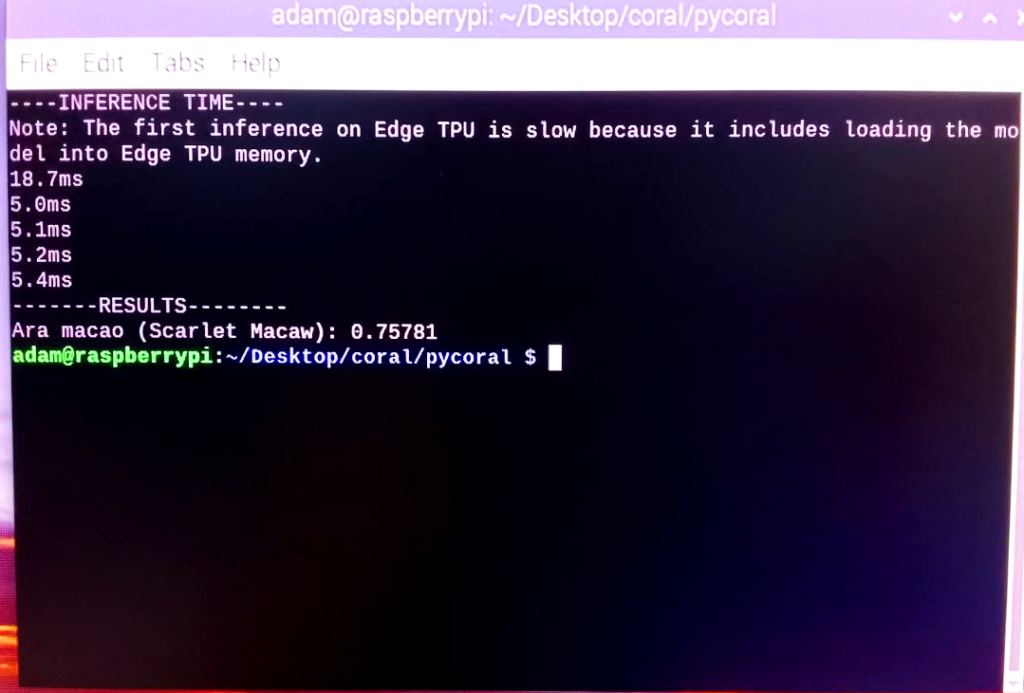

--input test_data/parrot.jpgThe output looks something like this:

You can see that the input was “parrot.jpg” and the model correctly identified it as a Scarlet Macaw, with a confidence score of 0.75.
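Roughly speaking, a line like “Scarlet Macaw” comes from ranking the model’s output scores (one per bird label) and looking up the winning index in the labels file. PyCoral’s example script uses its own helpers for this (read_label_file and classify.get_classes), and in the real model the raw 8-bit outputs are converted back into scores first. The sketch below only mimics the ranking and lookup in plain Python; parse_labels and top_k are hypothetical helpers written for illustration, it assumes each label line looks like an index followed by a name, and the labels and scores are made up.

```python
def parse_labels(lines):
    """Parse label lines into a {class_index: name} dict.

    Assumption: each line looks like '<index> <name>'; a line without a
    leading numeric index falls back to its line position.
    """
    labels = {}
    for position, raw in enumerate(lines):
        line = raw.strip()
        if not line:
            continue  # skip blank lines
        first, _, rest = line.partition(" ")
        if first.isdigit() and rest.strip():
            labels[int(first)] = rest.strip()
        else:
            labels[position] = line
    return labels


def top_k(scores, k=1):
    """Return the k (class_index, score) pairs with the highest scores."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Made-up labels and output scores, for illustration only.
    labels = parse_labels(["0 background", "1 Scarlet Macaw", "2 Northern Cardinal"])
    scores = [0.05, 0.75, 0.20]
    for index, score in top_k(scores, k=2):
        print(f"{labels.get(index, index)}: {score:.5f}")
```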

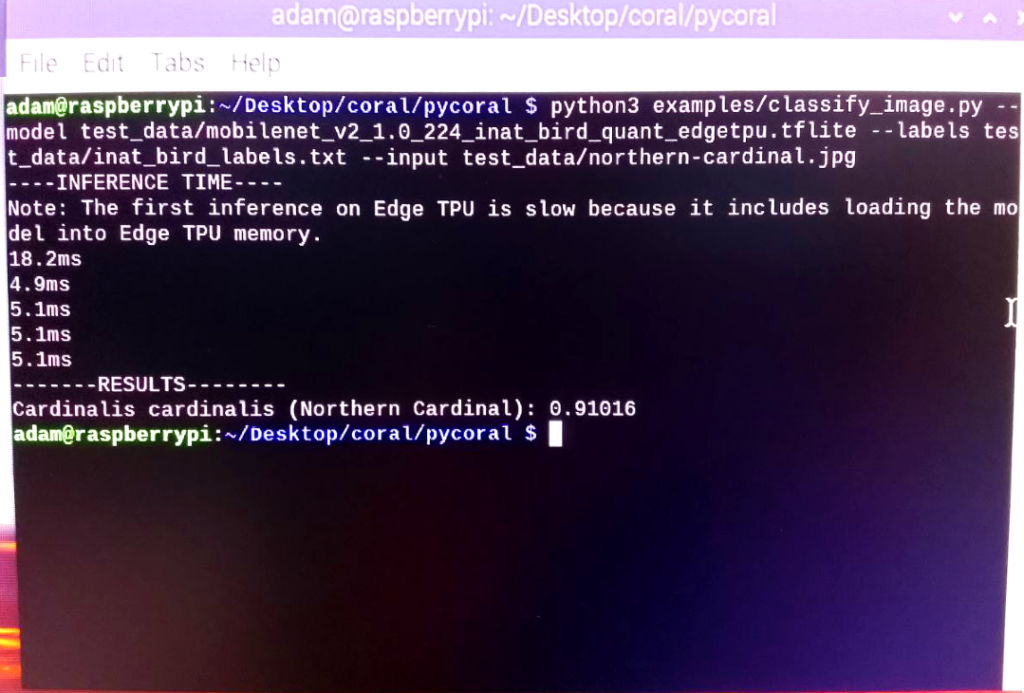

I found a picture of a Northern Cardinal and ran it through the model and here’s what it gave me.

As you can see, it gave a super accurate reading, with a confidence score of 0.91.

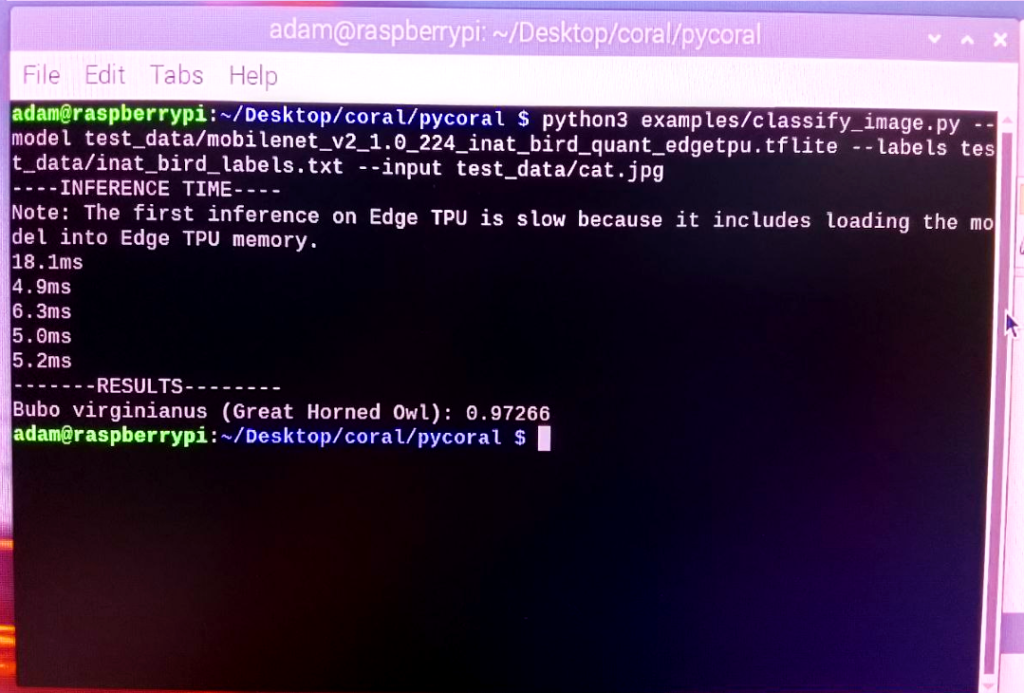

So then I wanted to see what would happen if I fed it a picture of a cat.

Although I totally understand why it thought the cat was a Great Horned Owl, I was surprised by its level of confidence.

I tried to get it to recognize pictures of people and determine which birds they were, but unfortunately it wouldn’t cooperate.
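One likely explanation for the confident owl: a classifier like this can only choose among the labels it was trained on, and (assuming, as is typical for MobileNet classifiers, that it ends in a softmax layer) its scores are normalized to add up to 1 across those labels. Unless the label set includes a “none of these” class, a cat or a person still has to be assigned to something, and softmax depends only on the differences between the raw scores, not on how well the image matches in absolute terms. The sketch below shows this with made-up raw scores (logits).

```python
import math


def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Made-up logits for three bird labels given a photo of a cat:
    # no label fits well, but the first fits "less badly" than the others.
    cat_probs = softmax([4.0, 1.0, 0.5])
    # Shift every score down by 10 (a worse match for every label):
    # the probabilities do not change, because only differences matter.
    shifted_probs = softmax([-6.0, -9.0, -9.5])
    print([round(p, 3) for p in cat_probs])
    print([round(p, 3) for p in shifted_probs])
```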

But please feel free to experiment and see what you can pull out of it. Comment below with the results!
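If you do experiment with your own scripts, a quick first check is whether PyCoral can see the accelerator at all. The sketch below assumes PyCoral was installed as in the steps above and calls its list_edge_tpus() function from pycoral.utils.edgetpu, which returns one dictionary per detected Edge TPU; the wrapper name find_edge_tpus is just for illustration.

```python
def find_edge_tpus():
    """Return the Edge TPUs PyCoral reports, or [] if PyCoral isn't installed.

    Each entry from pycoral.utils.edgetpu.list_edge_tpus() is a dict with a
    'type' (e.g. 'usb') and a 'path' for the device.
    """
    try:
        from pycoral.utils.edgetpu import list_edge_tpus
    except ImportError:
        return []  # PyCoral is missing, so no accelerator can be reported
    return list(list_edge_tpus())


if __name__ == "__main__":
    tpus = find_edge_tpus()
    if tpus:
        for tpu in tpus:
            print(f"Found Edge TPU: {tpu}")
    else:
        print("No Edge TPU found (or PyCoral is not installed).")
```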

Conclusion

So there you have it. While its ultra-sleek exterior might give it some mystique, the Google Coral USB Accelerator is no mystery box.

It is a powerful processor that can bring AI to edge devices.

So, whether you’re into IoT stuff, robotics, or you’ve got an idea for bringing AI into everyday devices, the Coral USB Accelerator is your ticket to ride. Although I gave you the example of an image recognition model, the accelerator can go much further – video recognition, object detection, on-device transfer learning, etc.

We also have an AIY Maker Kit that makes all of this possible – check out this video:

If you’re interested in AI, you can check out our Introduction to Artificial Intelligence.

And if you’re interested in making some AI projects yourself, you can check out some projects that make use of AI in our Paragon Projects series.

What are you planning on doing with the accelerator?

I like it when an article includes real-life examples. A good, expert article 🙂

Thanks!

Hi, do you know whether this USB board can speed up a PC that has some GenAI installed, like Ollama, with an open model like Llama 3.1 or Gemma? Using Ollama? Thanks

“commenter wrote that they weren’t that interested in a mystery AI box” – I guess the commenter did express any specific reasons.

The most concerning reasons are the lack of provenance from ML outputs and the inability to provide such for possible regulations.

Where one runs an ML provides nothing for this.

correction:

“commenter wrote that they weren’t that interested in a mystery AI box” – I guess the commenter did not express any specific reasons.


Interesting point, David.

And which is faster: GPU or TPU???

For artificial intelligence, the TPU. Definitely faster.

It would be great if it didn’t disconnect after an hour and require either a reboot or unplug and plug to get it working again.

Check out the open-source Frigate NVR, especially the latest beta3.